Conventionally, LLMs for code generation are trained on code scraped from the web. In their paper, ‘Textbooks Are All You Need’, Gunasekar et al. propose a textbook-based approach instead: code is filtered for educational quality and supplemented with synthetic, textbook-style data before being used for training.

The result is phi-1, a 1.3-billion-parameter model that outperforms many considerably larger code generation LLMs trained in the conventional way.

Let’s take a look at how it works.

The problem with training on online code

There are a few problems with training an LLM on code found online, from sources such as Stack Overflow, CodeContests, etc. They are:

Code snippets are often not self-contained, i.e., they depend on external modules or files that are needed to make sense of them.

A lot of boilerplate code gets repeated.

Many examples have little algorithmic value, i.e., much of the code merely manipulates data and passes it along without doing any meaningful computation.

Working of phi-1

Phi-1 uses 3 datasets:

Filtered code-language dataset

Synthetic Textbook

Synthetic Exercises

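The filtered code is obtained by first prompting GPT-4 to rate a subset of the code for its educational value to a student whose goal is to learn basic coding concepts. These ratings are then used to train a classifier that filters the rest of the dataset.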
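The annotate-then-classify idea can be sketched in a few lines. This is a minimal sketch under loud assumptions: the GPT-4 ratings are replaced by a hand-written, hypothetical label dictionary, and instead of the paper's random forest over pretrained-model embeddings it uses a hashed bag-of-tokens vector with a nearest-centroid classifier. `featurize`, `train_filter` and `DIM` are illustrative names, not part of any published code.

```python
import hashlib
import math
from typing import Callable, Dict, List

DIM = 64  # length of the hashed feature vector (arbitrary choice for this sketch)


def featurize(code: str) -> List[float]:
    """Hashed bag-of-tokens vector, L2-normalised; a stand-in for model embeddings."""
    vec = [0.0] * DIM
    for token in code.split():
        bucket = int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def centroid(vectors: List[List[float]]) -> List[float]:
    """Element-wise mean of a non-empty list of equal-length vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def train_filter(annotated: Dict[str, bool]) -> Callable[[str], bool]:
    """Fit a nearest-centroid classifier on labelled samples (True = educational)."""
    pos = centroid([featurize(c) for c, good in annotated.items() if good])
    neg = centroid([featurize(c) for c, good in annotated.items() if not good])

    def is_educational(code: str) -> bool:
        x = featurize(code)
        dist_pos = sum((a - b) ** 2 for a, b in zip(x, pos))
        dist_neg = sum((a - b) ** 2 for a, b in zip(x, neg))
        return dist_pos < dist_neg

    return is_educational


# Hypothetical labels; in the paper, labels like these come from prompting GPT-4.
annotated = {
    "def factorial(n):\n    return 1 if n <= 1 else n * factorial(n - 1)": True,
    "import config\nsettings = config.load()\nsettings.apply()": False,
}
is_educational = train_filter(annotated)

# Apply the cheap classifier to the (much larger) unlabelled corpus.
corpus = list(annotated) + ["def gcd(a, b):\n    while b:\n        a, b = b, a % b\n    return a"]
filtered = [snippet for snippet in corpus if is_educational(snippet)]
```

Hashing with `hashlib.md5` rather than Python's built-in `hash` keeps the features identical across runs, since `hash` on strings is randomized per process.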

Here’s an example of a positive and negative sample:

The synthetic textbook is generated by GPT-3.5, using prompts that vary the topic and the reader's level of understanding so that the generated text is diverse.
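The diversity trick can be sketched as prompt templating: each prompt pairs a topic with a target audience, so no two requests to the generator are identical. The topic and audience lists, the template wording and `build_prompts` are hypothetical; the exact prompts used for phi-1 are not reproduced here, and the call to GPT-3.5 itself is omitted.

```python
import itertools
import random
from typing import List

# Hypothetical topics and audiences; more entries give more combinations.
TOPICS = ["recursion", "list comprehensions", "binary search", "string formatting"]
AUDIENCES = [
    "a beginner who has never programmed before",
    "a student who knows basic loops and conditionals",
    "an experienced programmer who is new to Python",
]

PROMPT_TEMPLATE = (
    "Write a section of a Python textbook about {topic} for {audience}. "
    "Interleave clear explanations with short, self-contained code examples."
)


def build_prompts(n: int, seed: int = 0) -> List[str]:
    """Sample up to n distinct (topic, audience) pairs and render one prompt per pair."""
    combos = list(itertools.product(TOPICS, AUDIENCES))
    rng = random.Random(seed)  # seeded so the sampled prompts are reproducible
    chosen = rng.sample(combos, k=min(n, len(combos)))
    return [PROMPT_TEMPLATE.format(topic=t, audience=a) for t, a in chosen]


for prompt in build_prompts(3):
    print(prompt)
```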

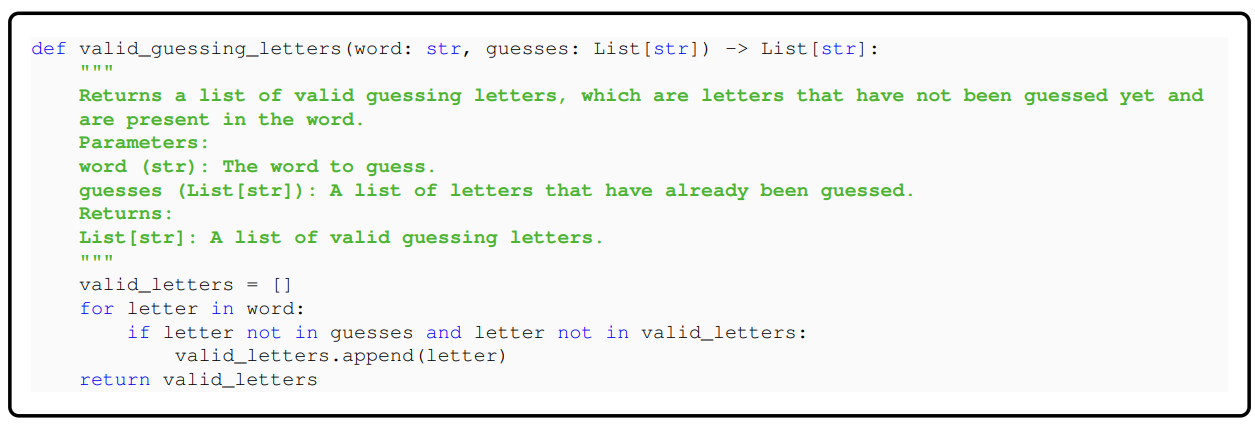

Each synthetic exercise consists of a function's docstring, and the model has to complete the function it describes.
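A made-up exercise in that format is shown below: the prompt is a function signature plus docstring, and the training target is the body that completes it. `running_max` is purely illustrative and not taken from the paper's dataset.

```python
from typing import List

# The prompt the model sees: signature + docstring (illustrative exercise).
EXERCISE_PROMPT = '''def running_max(values: List[int]) -> List[int]:
    """Return a list whose i-th element is the largest of values[0..i].

    Example: running_max([3, 1, 4, 1, 5]) == [3, 3, 4, 4, 5]
    """
'''

# The completion the model is trained to produce.
EXERCISE_COMPLETION = '''    result: List[int] = []
    for v in values:
        result.append(v if not result else max(result[-1], v))
    return result
'''

# Prompt + completion together form a complete, runnable function.
namespace = {"List": List}
exec(EXERCISE_PROMPT + EXERCISE_COMPLETION, namespace)
running_max = namespace["running_max"]
print(running_max([3, 1, 4, 1, 5]))
```

Running the concatenated text is only a way to check that the pair forms a valid function; in training, the prompt and completion are simply text.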

Training happens in two stages. The model is first trained on CodeTextbook (the filtered code-language data plus the synthetic textbook) to obtain phi-1-base. This base model is then fine-tuned on the synthetic exercises (CodeExercises) to obtain phi-1.
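The two-stage schedule can be illustrated with a toy model. In this sketch a bigram counter stands in for the transformer (an assumption made purely to keep the example small), and the datasets are hypothetical one-line stand-ins; the point is only that stage 2 continues from the stage-1 parameters rather than starting from scratch.

```python
import copy
from collections import Counter, defaultdict
from typing import DefaultDict, List


class BigramModel:
    """Toy next-token model: 'training' just counts which token follows which."""

    def __init__(self) -> None:
        self.counts: DefaultDict[str, Counter] = defaultdict(Counter)

    def train(self, corpus: List[str]) -> "BigramModel":
        for text in corpus:
            tokens = text.split()
            for prev, nxt in zip(tokens, tokens[1:]):
                self.counts[prev][nxt] += 1
        return self

    def predict(self, token: str) -> str:
        """Most frequent continuation seen so far, or "" if the token is unseen."""
        follow = self.counts.get(token)
        return follow.most_common(1)[0][0] if follow else ""


# Hypothetical stand-ins for the three datasets.
filtered_code = ["def add ( a , b ) : return a + b"]
synthetic_textbook = ["a function returns a value to its caller"]
code_exercises = ["def square ( x ) : return x * x"]

# Stage 1: pretrain on CodeTextbook = filtered code-language data + synthetic textbook.
phi_1_base = BigramModel().train(filtered_code + synthetic_textbook)

# Stage 2: fine-tune a copy of the stage-1 model on CodeExercises, keeping both checkpoints.
phi_1 = copy.deepcopy(phi_1_base).train(code_exercises)
```

Copying before fine-tuning keeps the base checkpoint intact, so both models can be evaluated side by side.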

Conclusion

The authors show that phi-1 performs strongly on code generation benchmarks such as HumanEval and MBPP. I chose to write about this paper because it shows how careful choices about the data used for pretraining and fine-tuning can lead to superior performance.
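Code benchmarks such as HumanEval and MBPP are usually scored with pass@k: the probability that at least one of k generated samples passes a problem's unit tests. Below is a sketch of the unbiased estimator introduced alongside HumanEval by Chen et al. (2021); the function names and the `(n, c)` input format are our own choices.

```python
from math import comb
from typing import List, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples generated, c of them pass.

    pass@k = 1 - C(n - c, k) / C(n, k)
    """
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(results: List[Tuple[int, int]], k: int = 1) -> float:
    """Average pass@k over all problems; each entry is an (n, c) pair."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Four problems, one sample each: two solved, two failed.
print(benchmark_score([(1, 1), (1, 0), (1, 1), (1, 0)]))
```

With one sample per problem (n = 1), pass@1 is simply the fraction of problems solved.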

That’s it for this issue. I hope you found this article interesting. Until next time!

📖Resources

Attention Is All You Need (other than inspiring the title, this paper has nothing to do with phi-1, but if you haven’t read it, you must!)
