Generative Disco is an AI system proposed by Liu et al. that uses large language models and text-to-image models to generate a video from music. Think DALL·E 2 and ChatGPT combined iteratively. In this post, let’s look at how it works.

Design

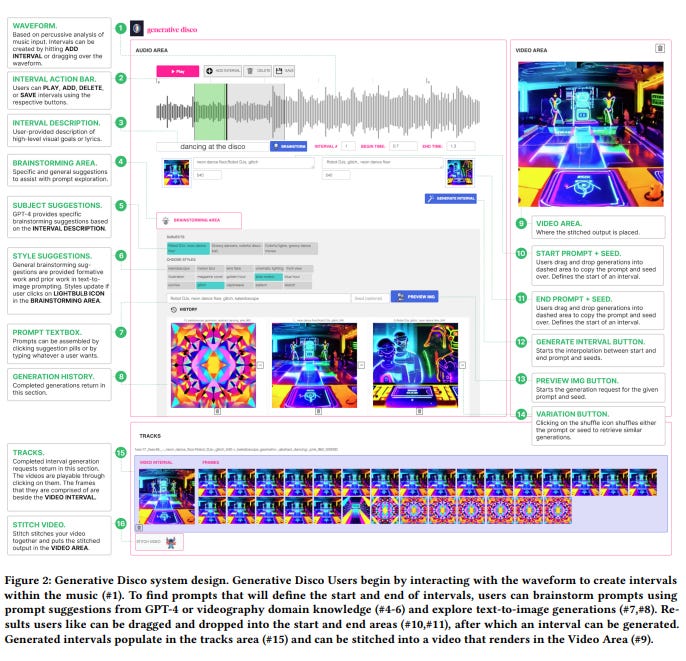

Generative Disco follows a step-by-step process to generate a video from an audio file. The figure above shows all the steps involved in creating a video.

These are some of the key features that I found while reading the paper:

Intervals

Video generation happens over user-defined intervals of a longer audio track. The user sets START TIME and END TIME parameters to define an interval, and also enters a high-level description of that interval, which is used as the parent prompt.
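To make the idea of an interval concrete, here is a minimal sketch of how one could be represented. The class name, field names, and the 24 fps default are my own assumptions for illustration; they do not come from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interval:
    """One user-defined slice of the audio track (hypothetical structure)."""

    start_time: float  # seconds from the start of the track
    end_time: float    # seconds from the start of the track
    description: str   # high-level description, used as the parent prompt

    def __post_init__(self) -> None:
        # Reject intervals that are empty, reversed, or undescribed.
        if self.start_time < 0 or self.end_time <= self.start_time:
            raise ValueError("need 0 <= start_time < end_time")
        if not self.description.strip():
            raise ValueError("description must not be empty")

    @property
    def duration(self) -> float:
        """Length of the interval in seconds."""
        return self.end_time - self.start_time

    def frame_count(self, fps: int = 24) -> int:
        """Number of video frames needed to cover the interval at `fps`."""
        return max(1, round(self.duration * fps))


chorus = Interval(start_time=30.0, end_time=42.5,
                  description="a storm breaking over the sea")
print(chorus.duration, chorus.frame_count())
```

Validating the times up front keeps a reversed or zero-length interval from reaching the later generation steps.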

As an example from the authors: once the description is entered, GPT-4 is queried with the following prompt:

“In less than 5 words, describe an image for the following words {INTERVAL DESCRIPTION}.”
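Filling in that template is simple string formatting. The sketch below only builds the prompt text; the function and constant names are hypothetical, and the call to GPT-4 itself is left out because the post does not describe how the authors make it.

```python
# The wording of the template is taken from the prompt quoted above.
PROMPT_TEMPLATE = (
    "In less than 5 words, describe an image for the following words "
    "{interval_description}."
)


def build_brainstorm_prompt(interval_description: str) -> str:
    """Insert the user's interval description into the template."""
    cleaned = interval_description.strip()
    if not cleaned:
        raise ValueError("interval description must not be empty")
    return PROMPT_TEMPLATE.format(interval_description=cleaned)


print(build_brainstorm_prompt("a storm breaking over the sea"))
```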

START & END Prompts

The video is generated by interpolating between two images that mark the start and the end of the interval. The user provides each image along with a text prompt. The first is the image the video starts with, and the second is the image it ends on.
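To give a feel for what "interpolating between two images" means, here is a deliberately simplified sketch: a plain linear cross-fade between two tiny images stored as flat lists of pixel values. This is a stand-in I chose for illustration, not the authors' method; a text-to-image system would more likely interpolate inside the model than across raw pixels. All names here are hypothetical.

```python
from typing import List, Sequence


def lerp(a: Sequence[float], b: Sequence[float], t: float) -> List[float]:
    """Blend two equally sized vectors: t=0 gives a, t=1 gives b."""
    if len(a) != len(b):
        raise ValueError("images must have the same size")
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def interpolate_frames(start: Sequence[float], end: Sequence[float],
                       n_frames: int) -> List[List[float]]:
    """Return n_frames evenly spaced blends, including both endpoints."""
    if n_frames < 2:
        raise ValueError("need at least a start and an end frame")
    return [lerp(start, end, i / (n_frames - 1)) for i in range(n_frames)]


# Toy three-pixel "images" standing in for the start and end images.
start_image = [0.0, 0.0, 0.0]
end_image = [1.0, 0.5, 0.0]
frames = interpolate_frames(start_image, end_image, 5)
for frame in frames:
    print(frame)
```

The first and last frames are exactly the start and end images, which is what lets the two user-chosen images pin down the interval.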

Brainstorming area

This is an interesting add-on that lets the user generate start and end prompts from text. Just like in text-to-image models, users can also specify a style in which the image, and hence the video, should be created.

The brainstorming area auto-populates some prompts based on GPT-4's response to the {INTERVAL DESCRIPTION} from step 1.
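Combining the suggested prompts with a chosen style could look something like the sketch below. The "subject, style" format is a common convention for text-to-image prompts that I am assuming here; the paper may combine them differently, and the function name is hypothetical.

```python
from typing import List, Optional


def styled_prompts(suggestions: List[str],
                   style: Optional[str] = None) -> List[str]:
    """Clean up suggested prompts and append an optional style to each."""
    # Drop blank suggestions and trailing periods left over from the LLM reply.
    cleaned = [s.strip().rstrip(".") for s in suggestions if s.strip()]
    if not style or not style.strip():
        return cleaned
    return [f"{s}, {style.strip()}" for s in cleaned]


suggestions = ["Dark clouds gathering.", "  ", "Lightning over waves"]
print(styled_prompts(suggestions, style="oil painting"))
```

The user would then pick two of these as the start and end prompts for the interval.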

Multiple intervals are then stitched to create the final video.
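Stitching can be pictured as sorting the generated clips by start time and concatenating their frames. The sketch below assumes each clip is a `(start_time, end_time, frames)` tuple and that intervals must not overlap; both are my assumptions, since the post does not say how gaps or overlaps are handled.

```python
from typing import List, Optional, Tuple

# (start_time, end_time, frames); frames are placeholders such as file names.
Clip = Tuple[float, float, List[str]]


def stitch(clips: List[Clip]) -> List[str]:
    """Concatenate clip frames in order of start time."""
    video: List[str] = []
    previous_end: Optional[float] = None
    for start, end, frames in sorted(clips, key=lambda clip: clip[0]):
        # Two clips covering the same stretch of audio would be ambiguous.
        if previous_end is not None and start < previous_end:
            raise ValueError("intervals overlap")
        video.extend(frames)
        previous_end = end
    return video


clips = [
    (10.0, 20.0, ["c.png", "d.png"]),
    (0.0, 10.0, ["a.png", "b.png"]),
]
print(stitch(clips))
```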

Conclusion

Video generation and editing have always been difficult and tedious tasks. Doing them well requires deep knowledge of heavyweight applications like Adobe Premiere Pro, Final Cut Pro, etc. AI can help democratize this process by leveraging LLMs and text-to-image models. This is definitely a super early version of what future video generation and editing might look like, and I am excited to see what’s next!

That’s it for this issue. I hope you found this article interesting. Until next time!
