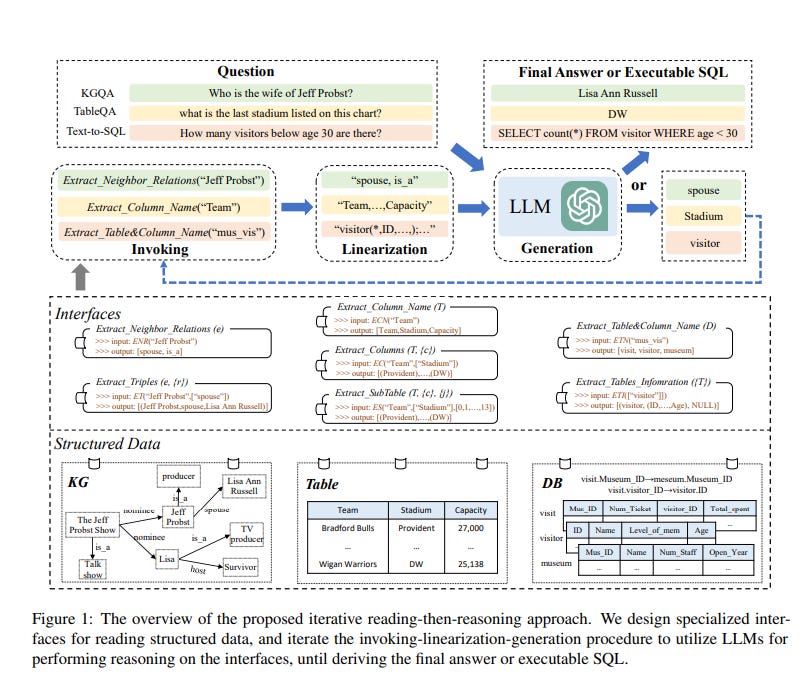

StructGPT, proposed by Jiang et al., is an iterative read-and-reason approach that improves the zero-shot capabilities of LLMs on structured data such as knowledge graphs, tables, and databases. LLMs have traditionally struggled with these data structures because such formats are largely absent from their mostly textual training data. StructGPT addresses this by extracting the relevant parts of the structured data and converting them into a text format the LLM can ingest, a process called linearization.

In this post, let’s explore how it works.

Structured Data Types

The approach considers three types of structured data:

Knowledge Graph

A set of triplets. Each triplet {x1, r, x2} indicates that relation r holds between the head entity x1 and the tail entity x2.

Data Table

A table with c columns and r rows.

Database

A set of data tables linked to one another through foreign keys.
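To make the three data types concrete, here is a minimal Python sketch of how they might be held in memory. The class names (`Triple`, `Table`, `ForeignKey`, `Database`) and the sample data are hypothetical illustrations, not StructGPT's own data model.

```python
from dataclasses import dataclass, field

# Hypothetical in-memory representations of the three structured data types.

@dataclass(frozen=True)
class Triple:
    """A knowledge-graph triplet {x1, r, x2}: relation r holds between head x1 and tail x2."""
    head: str
    relation: str
    tail: str

@dataclass
class Table:
    """A data table: column names plus rows holding one value per column."""
    name: str
    columns: list[str]
    rows: list[list[object]] = field(default_factory=list)

@dataclass(frozen=True)
class ForeignKey:
    """table.column references ref_table.ref_column."""
    table: str
    column: str
    ref_table: str
    ref_column: str

@dataclass
class Database:
    """A set of tables linked through foreign keys."""
    tables: dict[str, Table]
    foreign_keys: list[ForeignKey] = field(default_factory=list)

# Illustrative sample data.
kg = [
    Triple("Paris", "capital_of", "France"),
    Triple("France", "official_language", "French"),
]
movies = Table("movies", ["id", "title", "year"], [[1, "Alien", 1979], [2, "Heat", 1995]])
reviews = Table("reviews", ["movie_id", "score"], [[1, 9], [2, 8]])
db = Database(
    tables={"movies": movies, "reviews": reviews},
    foreign_keys=[ForeignKey("reviews", "movie_id", "movies", "id")],
)
```

Note that the first triple's tail (France) is the second triple's head; this chaining is what makes multi-hop reasoning over a knowledge graph possible.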

How It Works

Corresponding to the three data types, StructGPT addresses three tasks:

KG-based question answering (KGQA)

Setup

An intuitive approach is to start from the topic entity mentioned in the question and hop along relations, where the tail entity of each hop becomes the head entity of the next. For example, if we start from the triplet {x1, r1, x2} and also have {x2, r2, x3}, we can move from x1 to x3 via x2.
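A minimal sketch of this hopping idea, assuming the graph is a plain Python list of `(head, relation, tail)` tuples. Here the relation path is given explicitly; in StructGPT the LLM decides which relations to follow. The helper names `hop` and `follow_path` are hypothetical.

```python
# Triples as (head, relation, tail) tuples; the sample facts are illustrative.
triples = [
    ("x1", "r1", "x2"),
    ("x2", "r2", "x3"),
    ("x1", "r3", "x4"),
]

def hop(entities: set[str], relation: str, kg: list[tuple[str, str, str]]) -> set[str]:
    """Follow `relation` one hop: return the tails of triples whose head is in `entities`."""
    return {t for h, r, t in kg if h in entities and r == relation}

def follow_path(start: str, path: list[str], kg: list[tuple[str, str, str]]) -> set[str]:
    """Walk a relation path; the tails reached at each hop become the heads of the next hop."""
    frontier = {start}
    for relation in path:
        frontier = hop(frontier, relation, kg)
    return frontier

print(follow_path("x1", ["r1", "r2"], triples))  # {'x3'}
```

Working with a set of frontier entities (rather than a single one) lets the same code handle relations that fan out to several tails.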

The two functions used for this are:

Extract_Neighbor_Relations(e): extracts all the neighbouring relations of the entity e.

Extract_Triples (e, {r}): extracts all the triples with the relation in {r} and head entity e.
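The two interfaces can be sketched over an in-memory list of triples as below. This assumes "neighbouring relations" means outgoing edges (triples where `e` is the head), matching the description of Extract_Triples; a real system would query a KG store instead, and the sample graph is illustrative.

```python
Triple = tuple[str, str, str]  # (head, relation, tail)

def extract_neighbor_relations(e: str, kg: list[Triple]) -> list[str]:
    """Distinct relations of triples whose head is e, in first-seen order."""
    return list(dict.fromkeys(r for h, r, _ in kg if h == e))

def extract_triples(e: str, relations: set[str], kg: list[Triple]) -> list[Triple]:
    """All triples with head entity e and a relation in `relations`."""
    return [(h, r, t) for h, r, t in kg if h == e and r in relations]

kg = [
    ("Inception", "directed_by", "Christopher Nolan"),
    ("Inception", "release_year", "2010"),
    ("Inception", "starring", "Leonardo DiCaprio"),
    ("Inception", "starring", "Elliot Page"),
]
print(extract_neighbor_relations("Inception", kg))
# ['directed_by', 'release_year', 'starring']
print(extract_triples("Inception", {"starring"}, kg))
# [('Inception', 'starring', 'Leonardo DiCaprio'), ('Inception', 'starring', 'Elliot Page')]
```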

Implementation

Call Extract_Neighbor_Relations(e) to get all the one-hop relations.

Linearize these relations into text and feed them to the LLM, which selects the relations relevant to the question.

Call Extract_Triples(e, {r}) to retrieve the triples (head and tail entities) for the selected relations.

Linearize these triples into text and feed them to the LLM, which answers with the tail entities of the most relevant triples. For multi-hop questions, those tail entities can serve as the starting entities for the next iteration.
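The four steps above can be sketched end to end as follows. This is a minimal single-hop sketch, assuming the LLM is any function mapping a prompt string to a reply string. The prompt wording, the comma-separated reply format, and `toy_llm` (a hard-wired stand-in so the example runs offline) are all hypothetical, not the prompts used in the paper.

```python
from typing import Callable

Triple = tuple[str, str, str]  # (head, relation, tail)

def extract_neighbor_relations(e: str, kg: list[Triple]) -> list[str]:
    return list(dict.fromkeys(r for h, r, _ in kg if h == e))

def extract_triples(e: str, relations: set[str], kg: list[Triple]) -> list[Triple]:
    return [(h, r, t) for h, r, t in kg if h == e and r in relations]

def kgqa_one_hop(question: str, e: str, kg: list[Triple], llm: Callable[[str], str]) -> str:
    # Step 1: collect the one-hop relations of the topic entity.
    relations = extract_neighbor_relations(e, kg)
    # Step 2: linearize the relations and let the LLM pick the useful ones
    # (assumed reply format: comma-separated relation names).
    reply = llm(f"Question: {question}\nCandidate relations: {', '.join(relations)}\n"
                "Which relations are useful? Answer with a comma-separated list.")
    chosen = {r.strip() for r in reply.split(",")} & set(relations)
    # Step 3: retrieve the triples for the chosen relations.
    triples = extract_triples(e, chosen, kg)
    # Step 4: linearize the triples and ask the LLM for the final answer.
    facts = "\n".join(f"({h}, {r}, {t})" for h, r, t in triples)
    return llm(f"Question: {question}\nFacts:\n{facts}\nAnswer:")

def toy_llm(prompt: str) -> str:
    """HYPOTHETICAL stand-in for a real LLM, hard-wired for the demo question."""
    if "Candidate relations" in prompt:
        return "directed_by"
    # Answer with the tail of the first linearized fact.
    fact_lines = [line for line in prompt.splitlines() if line.startswith("(")]
    return fact_lines[0].strip("()").split(", ")[2] if fact_lines else "unknown"

kg = [
    ("Inception", "directed_by", "Christopher Nolan"),
    ("Inception", "release_year", "2010"),
]
print(kgqa_one_hop("Who directed Inception?", "Inception", kg, toy_llm))  # Christopher Nolan
```

Passing the LLM in as a plain callable keeps the retrieval and linearization logic testable without a model; swapping in a real client only changes `llm`.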

Table-based question answering (TableQA)

Setup

The goal here is for the LLM to select the columns relevant to the question, then scan those columns row by row to identify the exact sub-table needed to generate the answer. The methods used for this are:

Extract_Column_Name (T): extracts all the column names of a table T.

Extract_Columns (T, {c}): extracts the contents of columns from a table T by indices {c}.

Extract_SubTable (T, {c}, {j}): extracts the sub-table specified by the column indices {c} and row indices {j} from a table T.
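A minimal sketch of the three table interfaces, assuming a table is a list of rows whose first row holds the column names. Column and row indices are 0-based, and row indices count data rows only (the header is excluded); these conventions and the sample table are assumptions for illustration.

```python
# Hypothetical representation: first row = column names, remaining rows = data.
Table = list[list[object]]

def extract_column_name(T: Table) -> list[str]:
    """Return all column names of table T."""
    return [str(name) for name in T[0]]

def extract_columns(T: Table, cols: list[int]) -> list[list[object]]:
    """Return the contents of the columns at indices `cols`, one list per column."""
    data = T[1:]
    return [[row[c] for row in data] for c in cols]

def extract_subtable(T: Table, cols: list[int], rows: list[int]) -> Table:
    """Return the sub-table with columns `cols` and data rows `rows` (header kept)."""
    data = T[1:]
    header = [T[0][c] for c in cols]
    return [header] + [[data[j][c] for c in cols] for j in rows]

T = [
    ["player", "team", "goals"],
    ["Ann", "Red", 12],
    ["Bo", "Blue", 7],
    ["Cy", "Red", 3],
]
print(extract_column_name(T))               # ['player', 'team', 'goals']
print(extract_columns(T, [0, 2]))           # [['Ann', 'Bo', 'Cy'], [12, 7, 3]]
print(extract_subtable(T, [0, 2], [0, 2]))  # [['player', 'goals'], ['Ann', 12], ['Cy', 3]]
```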

Implementation

Call Extract_Column_Name (T) to get all the column names.

Linearize them to text and feed them into the LLM to get the relevant columns.

Get the content of the selected columns using Extract_Columns (T, {c}).

Linearize the column contents and ask the LLM to identify the useful rows.

Use Extract_SubTable (T, {c}, {j}) to build the sub-table, linearize it, and feed it to the LLM as the final input. The LLM's response is the answer to the user query.
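The TableQA steps above can be chained as in the sketch below. It assumes the same list-of-rows table layout, a row-by-row linearization of the form `row 1: player is Ann | goals is 12`, and comma-separated LLM replies; the prompts, that linearization format, and `toy_llm` (a hard-wired offline stand-in) are hypothetical rather than taken from the paper.

```python
from typing import Callable

Table = list[list[object]]  # first row = column names, remaining rows = data

def extract_column_name(T: Table) -> list[str]:
    return [str(name) for name in T[0]]

def extract_columns(T: Table, cols: list[int]) -> list[list[object]]:
    return [[row[c] for row in T[1:]] for c in cols]

def extract_subtable(T: Table, cols: list[int], rows: list[int]) -> Table:
    data = T[1:]
    return [[T[0][c] for c in cols]] + [[data[j][c] for c in cols] for j in rows]

def linearize(T: Table) -> str:
    """Assumed row-by-row format, e.g. 'row 1: player is Ann | goals is 12'."""
    header, data = T[0], T[1:]
    return "\n".join(
        f"row {i + 1}: " + " | ".join(f"{h} is {v}" for h, v in zip(header, row))
        for i, row in enumerate(data)
    )

def table_qa(question: str, T: Table, llm: Callable[[str], str]) -> str:
    names = extract_column_name(T)
    # Steps 1-2: show the column names and let the LLM pick the relevant ones.
    reply = llm(f"Question: {question}\nColumns: {', '.join(names)}\nWhich columns are relevant?")
    picked = {s.strip() for s in reply.split(",")}
    cols = [i for i, name in enumerate(names) if name in picked]
    # Step 3: fetch the selected columns' contents and linearize them.
    contents = extract_columns(T, cols)
    col_text = "\n".join(f"{names[c]}: {', '.join(map(str, v))}" for c, v in zip(cols, contents))
    # Step 4: let the LLM pick the useful rows (assumed reply: 0-based indices).
    reply = llm(f"Question: {question}\nColumn contents:\n{col_text}\nWhich rows (0-based) are relevant?")
    n_rows = len(T) - 1
    rows = [int(s) for s in reply.split(",") if s.strip().isdigit() and int(s) < n_rows]
    # Step 5: build the sub-table, linearize it, and ask for the final answer.
    sub = extract_subtable(T, cols, rows)
    return llm(f"Question: {question}\nTable:\n{linearize(sub)}\nAnswer:")

def toy_llm(prompt: str) -> str:
    """HYPOTHETICAL stand-in for a real LLM, hard-wired for the demo question."""
    if "Which columns" in prompt:
        return "player, goals"
    if "Which rows" in prompt:
        return "2"  # the data row where player is Cy
    row_line = next(line for line in prompt.splitlines() if line.startswith("row "))
    return row_line.rsplit(" is ", 1)[1]  # value of the last column

T = [["player", "team", "goals"], ["Ann", "Red", 12], ["Bo", "Blue", 7], ["Cy", "Red", 3]]
print(table_qa("How many goals did Cy score?", T, toy_llm))  # 3
```

Narrowing first by columns and then by rows keeps the final prompt small, which is the point of the iterative reading step when tables are too large to paste whole.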

DB-based semantic parsing (Text-to-SQL)

Setup

The process is similar to TableQA, but with an additional step: identifying which tables in the database are relevant. The methods used for this are:

Extract_Table & Column_Name (D): extracts the names of all the tables and their contained columns from the database.

Extract_Tables_Information ({T}): extracts the table names, column names, and foreign keys from a set of tables {T}.
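One way these two interfaces could be realized on a SQLite database is with Python's built-in `sqlite3` module and SQLite's `PRAGMA table_info` and `PRAGMA foreign_key_list`. The function names mirror the interfaces above, but the implementation, the foreign-key tuple layout, and the sample schema are assumptions for illustration.

```python
import sqlite3

def extract_table_and_column_name(conn: sqlite3.Connection) -> dict[str, list[str]]:
    """Map every user table in the database to its column names."""
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' "
        "AND name NOT LIKE 'sqlite_%' ORDER BY name")]
    # PRAGMA table_info rows are (cid, name, type, notnull, dflt_value, pk).
    # Table names are interpolated into SQL, so only pass trusted names.
    return {t: [col[1] for col in conn.execute(f'PRAGMA table_info("{t}")')] for t in tables}

def extract_tables_information(conn: sqlite3.Connection, tables: list[str]) -> dict[str, dict]:
    """Return column names and foreign keys (table, column, ref_table, ref_column) per table."""
    info = {}
    for t in tables:
        columns = [col[1] for col in conn.execute(f'PRAGMA table_info("{t}")')]
        # PRAGMA foreign_key_list rows are (id, seq, table, from, to, on_update, on_delete, match).
        fks = [(t, fk[3], fk[2], fk[4]) for fk in conn.execute(f'PRAGMA foreign_key_list("{t}")')]
        info[t] = {"columns": columns, "foreign_keys": fks}
    return info

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE movies (id INTEGER PRIMARY KEY, title TEXT, year INTEGER);
CREATE TABLE reviews (movie_id INTEGER REFERENCES movies(id), score INTEGER);
CREATE TABLE actors (name TEXT, born INTEGER);
""")
print(extract_table_and_column_name(conn))
# {'actors': ['name', 'born'], 'movies': ['id', 'title', 'year'], 'reviews': ['movie_id', 'score']}
print(extract_tables_information(conn, ["movies", "reviews"]))
```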

Implementation

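Call Extract_Table & Column_Name (D) to get the names of all tables and their columns, then linearize them and let the LLM select the relevant tables.

Call Extract_Tables_Information ({T}) to get the column names and foreign keys of the selected tables.

Linearize this schema information and feed it to the LLM, which generates the SQL query that answers the question.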
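The Text-to-SQL steps above can be sketched on SQLite as follows. The schema linearization (`table(col, ...)` plus `a.x = b.y` lines for foreign keys), the prompt wording, and `toy_llm` (a hard-wired offline stand-in for a real model) are hypothetical; the generated SQL is executed at the end only to show the output.

```python
import sqlite3
from typing import Callable

def table_columns(conn: sqlite3.Connection, table: str) -> list[str]:
    # PRAGMA table_info rows are (cid, name, type, notnull, dflt_value, pk).
    return [row[1] for row in conn.execute(f'PRAGMA table_info("{table}")')]

def text_to_sql(question: str, conn: sqlite3.Connection, llm: Callable[[str], str]) -> str:
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' "
        "AND name NOT LIKE 'sqlite_%' ORDER BY name")]
    # Step 1: linearize every table with its columns; the LLM picks the tables it needs
    # (assumed reply format: comma-separated table names).
    overview = "\n".join(f"{t}({', '.join(table_columns(conn, t))})" for t in tables)
    reply = llm(f"Question: {question}\nTables:\n{overview}\nWhich tables are needed?")
    picked = {s.strip() for s in reply.split(",")}
    chosen = [t for t in tables if t in picked]
    # Step 2: linearize the columns and foreign keys of the chosen tables.
    # PRAGMA foreign_key_list rows are (id, seq, table, from, to, on_update, on_delete, match).
    lines = []
    for t in chosen:
        lines.append(f"{t}({', '.join(table_columns(conn, t))})")
        for fk in conn.execute(f'PRAGMA foreign_key_list("{t}")'):
            lines.append(f"{t}.{fk[3]} = {fk[2]}.{fk[4]}")
    # Step 3: the LLM writes the SQL query from the linearized schema.
    return llm(f"Question: {question}\nSchema:\n" + "\n".join(lines) + "\nSQL:")

def toy_llm(prompt: str) -> str:
    """HYPOTHETICAL stand-in for a real LLM, hard-wired for the demo question."""
    if "Which tables" in prompt:
        return "movies, reviews"
    return ("SELECT m.title FROM movies AS m JOIN reviews AS r ON r.movie_id = m.id "
            "ORDER BY r.score DESC LIMIT 1")

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE movies (id INTEGER PRIMARY KEY, title TEXT, year INTEGER);
CREATE TABLE reviews (movie_id INTEGER REFERENCES movies(id), score INTEGER);
CREATE TABLE actors (name TEXT, born INTEGER);
INSERT INTO movies VALUES (1, 'Alien', 1979), (2, 'Heat', 1995);
INSERT INTO reviews VALUES (1, 9), (2, 8);
""")
sql = text_to_sql("Which movie has the highest review score?", conn, toy_llm)
print(conn.execute(sql).fetchall())  # [('Alien',)]
```

Including the foreign-key lines in the final prompt gives the model the join condition explicitly, while the irrelevant `actors` table never reaches it.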

Here is an illustration of the architecture given in the paper:

Conclusion

LLMs keep getting better at a wide range of tasks. As this paper shows, a crucial step in applying them to structured data is linearizing the raw data into a format the LLM can process. With better linearization, LLMs should be able to solve more general tasks efficiently.

That’s it for this issue. I hope you found this article interesting. Until next time!
