Recent advances in generative AI have been groundbreaking. We have image generators such as DALL·E 2 and Stable Diffusion, and advanced text-to-text chatbots such as ChatGPT and Replika. In addition, apps like Synthesia can generate video from a text description.

One more frontier missing from this list is music. Today’s article will explore MusicLM, Google’s framework for generating music from text.

AudioLM

MusicLM is based on AudioLM, a framework for audio generation. The model has three components: a tokenizer, a decoder-only transformer and a detokenizer.

The tokenizer converts audio into two types of tokens, which the decoder-only transformer then learns to predict: semantic tokens, which capture the "meaning" or long-term structure of the audio, and acoustic tokens, which capture the details of the sound that carries that meaning.

The detokenizer then takes these tokens and generates audio that is consistent with the input.

In addition to this, there is another key component of the architecture: the hierarchical relationship between the tokens. The authors note that using only semantic tokens, i.e. the representation of what the audio means, results in poor audio quality.

However, using only acoustic tokens loses the context of the input for longer audio clips. Hence the model splits token generation into 3 stages:

Semantic modelling captures the high-level meaning and long-term structure of the audio.

Coarse acoustic modelling captures general characteristics such as the tone of the speaker, the recording conditions or the environment.

Fine acoustic modelling uses the coarse acoustic modelling output to recover finer details lost during generalization.
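The three stages above can be pictured as a chain in which each stage is conditioned on the output of the previous one. The sketch below is only an illustration of that wiring: the vocabulary sizes, the function names and the `next_token` rule are made-up placeholders standing in for a trained transformer, not anything taken from AudioLM.

```python
# Toy vocabulary sizes: illustrative assumptions, not values from the paper.
SEMANTIC_VOCAB = 8
COARSE_VOCAB = 16
FINE_VOCAB = 32


def next_token(context, vocab_size):
    """Placeholder for a trained transformer: a fixed, arbitrary function of the context."""
    return (31 * sum(context) + len(context)) % vocab_size


def generate(length, vocab_size, conditioning):
    """Emit `length` tokens one at a time. Each token depends on the conditioning
    tokens and on the tokens this stage has already produced."""
    tokens = []
    for _ in range(length):
        context = list(conditioning) + tokens
        tokens.append(next_token(context, vocab_size))
    return tokens


def three_stage_generation(prompt, n_semantic=4, n_coarse=6, n_fine=8):
    semantic = generate(n_semantic, SEMANTIC_VOCAB, prompt)  # stage 1
    coarse = generate(n_coarse, COARSE_VOCAB, semantic)      # stage 2, conditioned on stage 1
    fine = generate(n_fine, FINE_VOCAB, coarse)              # stage 3, conditioned on stage 2
    return semantic, coarse, fine


semantic, coarse, fine = three_stage_generation(prompt=[1, 2, 3])
print(semantic, coarse, fine)
```

The point of the sketch is the data flow: the fine stage never sees the prompt directly, only what the coarse stage produced from the semantic tokens.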

MusicLM builds on this model to allow for longer audio clips, conditioning signals (similar to giving style inputs to DALL·E 2), and higher-quality audio that stays consistent with the text input for longer.

Let’s look at how it works.

MusicLM

MusicLM by Google is based on the transformer architecture, which was introduced by Vaswani et al. (2017). The model uses self-attention to capture the relationships between the tokens of a music sequence and to generate new sequences that are similar in style to existing music.

Overview

MusicLM uses the architecture of AudioLM and adds a couple more components to it. It uses a music-text embedding model, similar to the CLIP model used by DALL·E 2. The music-text embeddings are created using MuLan, which uses ResNet-50 for the audio embeddings and BERT for the text embeddings.

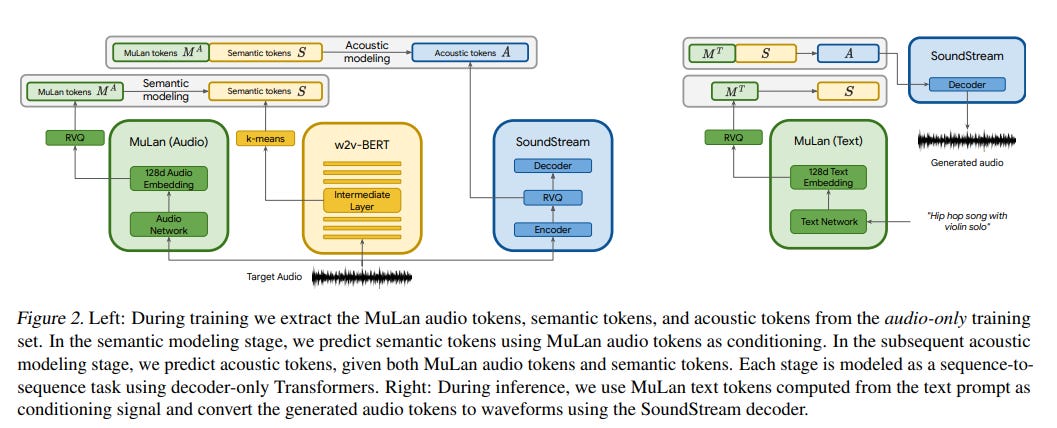

As shown in the figure above, MusicLM uses 3 models:

Similar to AudioLM, it uses the self-supervised audio representations of SoundStream to produce acoustic tokens.

It uses the w2v-BERT model to generate semantic tokens.

It uses MuLan for conditioning, i.e. adding extra context such as the style of music (classical, rock, etc.). MuLan’s music embedding is used during training and its text embedding during inference.
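To make the idea of a joint music-text embedding more concrete, here is a minimal sketch of how closeness in such a space can be measured with cosine similarity. The three-dimensional vectors and the `closest_description` helper are made up for illustration; real MuLan embeddings come from trained networks and have many more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def closest_description(music_embedding, candidates):
    """Return the text whose embedding is most similar to the music embedding."""
    return max(
        candidates,
        key=lambda text: cosine_similarity(music_embedding, candidates[text]),
    )


# Made-up embeddings, assumed to live in the same space.
music_clip_embedding = [0.9, 0.1, 0.2]
text_embeddings = {
    "calm classical piano": [0.8, 0.2, 0.1],
    "loud distorted rock guitar": [0.1, 0.9, 0.3],
}

print(closest_description(music_clip_embedding, text_embeddings))
```

Because music and text land in the same space, an embedding computed from a text prompt can stand in for one computed from audio, which is what makes the training/inference swap described above possible.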

Training and Inference

Let’s look at how the model is trained and how it makes inferences. Training consists of two stages: independent model training and hierarchical modelling.

Independent model training

SoundStream, w2v-BERT and MuLan, the three models described in the overview, are each pretrained independently and then frozen. They supply the tokens and embeddings that the later stages are trained on.

The paper has more specifics on how each of the models is trained.

Hierarchical Modelling

In addition to the independent training of the models shown above, the training phase also includes a hierarchical (i.e. stage-by-stage) process in which a decoder-only transformer produces each token based on the past tokens (autoregressively).

Stage 1: Semantic Modelling

In this stage, semantic tokens are predicted from the MuLan audio tokens, based on the distribution $p(S_t \mid S_{<t}, M_A)$

Where,

$S_t$ - Semantic token at time $t$

$S_{<t}$ - All the past semantic tokens

$M_A$ - MuLan audio tokens
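Applying this per-step distribution across a whole sequence gives, by the chain rule of probability, the likelihood of the full semantic sequence. Here $T$ is a symbol introduced for the sequence length, $S_t$ is the semantic token at step $t$ and $M_A$ stands for the MuLan audio tokens:

$$
p(S_1, \dots, S_T \mid M_A) = \prod_{t=1}^{T} p(S_t \mid S_{<t}, M_A)
$$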

Stage 2: Acoustic Modelling

In this stage, the acoustic tokens are predicted using the MuLan audio tokens and the semantic tokens. This stage is modelled on the following probability distribution:

$p(A_t \mid A_{<t}, S, M_A)$

Where,

$A_t$ - Acoustic token at time $t$

$A_{<t}$ - All the past acoustic tokens

$S$ - Semantic tokens from stage 1

$M_A$ - MuLan audio tokens
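The two stages can be sketched as two runs of the same autoregressive sampling loop, differing only in what they are conditioned on. This is a toy under stated assumptions: `toy_logits` is an arbitrary formula standing in for a trained transformer, and the token values, vocabulary sizes and sequence lengths are made up.

```python
import math
import random


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def toy_logits(context, vocab_size):
    """Stand-in for the transformer: scores every candidate token given the context.
    A trained model would learn this mapping; this is an arbitrary fixed formula."""
    return [math.sin(0.7 * token + 0.3 * sum(context)) for token in range(vocab_size)]


def sample_sequence(length, vocab_size, conditioning, rng):
    """Draw tokens one by one, each from a distribution over the next token
    given the conditioning tokens and the tokens sampled so far."""
    tokens = []
    for _ in range(length):
        probs = softmax(toy_logits(list(conditioning) + tokens, vocab_size))
        tokens.append(rng.choices(range(vocab_size), weights=probs, k=1)[0])
    return tokens


rng = random.Random(0)
mulan_audio_tokens = [3, 1, 4]  # M_A: made-up conditioning tokens

# Stage 1: semantic tokens S, conditioned on M_A.
semantic_tokens = sample_sequence(5, 8, mulan_audio_tokens, rng)
# Stage 2: acoustic tokens A, conditioned on both S and M_A.
acoustic_tokens = sample_sequence(10, 16, mulan_audio_tokens + semantic_tokens, rng)
print(semantic_tokens, acoustic_tokens)
```

Note that stage 2 receives the full semantic sequence, not just its past, which matches the unsubscripted S in the distribution above.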

Inference

In the inference phase, the text input is mapped by MuLan to a set of tokens in the joint text-audio embedding space, which MuLan learnt during training. The song is then generated using the same two stages described above, conditioned on these text tokens instead of the audio tokens ($M_T$ instead of $M_A$).
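A small sketch of why this swap works: the generation stages only ever see conditioning tokens, so they do not need to know which encoder produced them. Everything here is hypothetical: `audio_tower`, `text_tower`, `quantize` and `stage` are toy stand-ins, not MuLan's or MusicLM's actual components.

```python
def quantize(embedding, levels=4):
    """Map each embedding coordinate in [-1, 1] to one of `levels` integer bins."""
    tokens = []
    for value in embedding:
        clipped = max(-1.0, min(1.0, value))
        tokens.append(min(levels - 1, int((clipped + 1.0) / 2.0 * levels)))
    return tokens


def audio_tower(audio_samples):
    """Hypothetical stand-in for the audio encoder: returns a 2-d embedding."""
    mean = sum(audio_samples) / len(audio_samples)
    peak = max(abs(s) for s in audio_samples)
    return [mean, peak]


def text_tower(description):
    """Hypothetical stand-in for the text encoder: returns a 2-d embedding."""
    calm = 1.0 if "calm" in description else -1.0
    loud = 1.0 if "loud" in description else -1.0
    return [calm, loud]


def stage(length, vocab_size, conditioning):
    """Placeholder for one autoregressive transformer stage (arbitrary toy rule)."""
    tokens = []
    for _ in range(length):
        context = list(conditioning) + tokens
        tokens.append((31 * sum(context) + len(context)) % vocab_size)
    return tokens


def generate_tokens(conditioning_tokens, n_semantic=4, n_acoustic=8):
    """Run both stages; the source of the conditioning tokens is irrelevant here."""
    semantic = stage(n_semantic, 8, conditioning_tokens)
    acoustic = stage(n_acoustic, 16, list(conditioning_tokens) + semantic)
    return semantic, acoustic


# Conditioning derived from audio (M_A), as used during training.
m_a = quantize(audio_tower([0.2, -0.4, 0.5]))
# Conditioning derived from a text prompt (M_T), as used during inference.
m_t = quantize(text_tower("calm piano"))

print(generate_tokens(m_a))
print(generate_tokens(m_t))
```

Both calls go through the same `generate_tokens` function, which is the whole point: once text and audio share a token space, no paired text is needed to train the generation stages.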

Impact

MusicLM is another tool that will reduce the time professionals spend generating templates during the ideation phase. This will help them explore more options quickly before settling on one and going deep on it.

However, as with all generative AI tools, MusicLM also has some potential for adverse impact on creatives in the industry. The paper notes two main concerns:

Bias present in the training data will also be reflected in the model.

There is a chance of plagiarism due to memorization of the training data.

Hence the authors have stated that there are no plans to release this model to the public.

Conclusion

The recent boom in generative AI seems to have the potential to disrupt many industries. I feel this is true of any technology with a profound impact, like the Internet, personal computers and now AI. The short-term effect might be painful for some people, due to the need to reskill and switch jobs. But the long-term effect is always better lives for humans, because the computers handle the routine jobs and free us up to do the creative work.

What do you think about generative AI and its impact? Would love to know!


That’s it for this issue. I hope you found this article interesting. Until next time!
