LLMs are good at coming up with solutions to simple tasks. However, they fail at achieving complex tasks which require multiple steps and coordination between various steps. HuggingGPT is a new model proposed by Shen et. al. that uses LLMs to plan tasks, choose an appropriate model, execute the tasks, and generate a solution. In this post, we explore how it works. The model combines the ability of LLMs to deconstruct and understand human prompts and use them to plan and execute subtasks using the HuggingFace AI models.

Why is HuggingGPT required?

The authors note that despite the recent advances in LLMs, the current models lack the following abilities:

The lack of ability to process complex data such as speech, images, videos, etc.

Creating, scheduling and coordinating between multiple sub-tasks.

Not being able to perform well at specialized tasks like image recognition in comparison to fine-tuned models such as a CNN.

The authors propose using LLMs as an interface to connect different AI models and use the LLM as a brain to plan and execute the tasks required to complete the larger goal.

Model Architecture

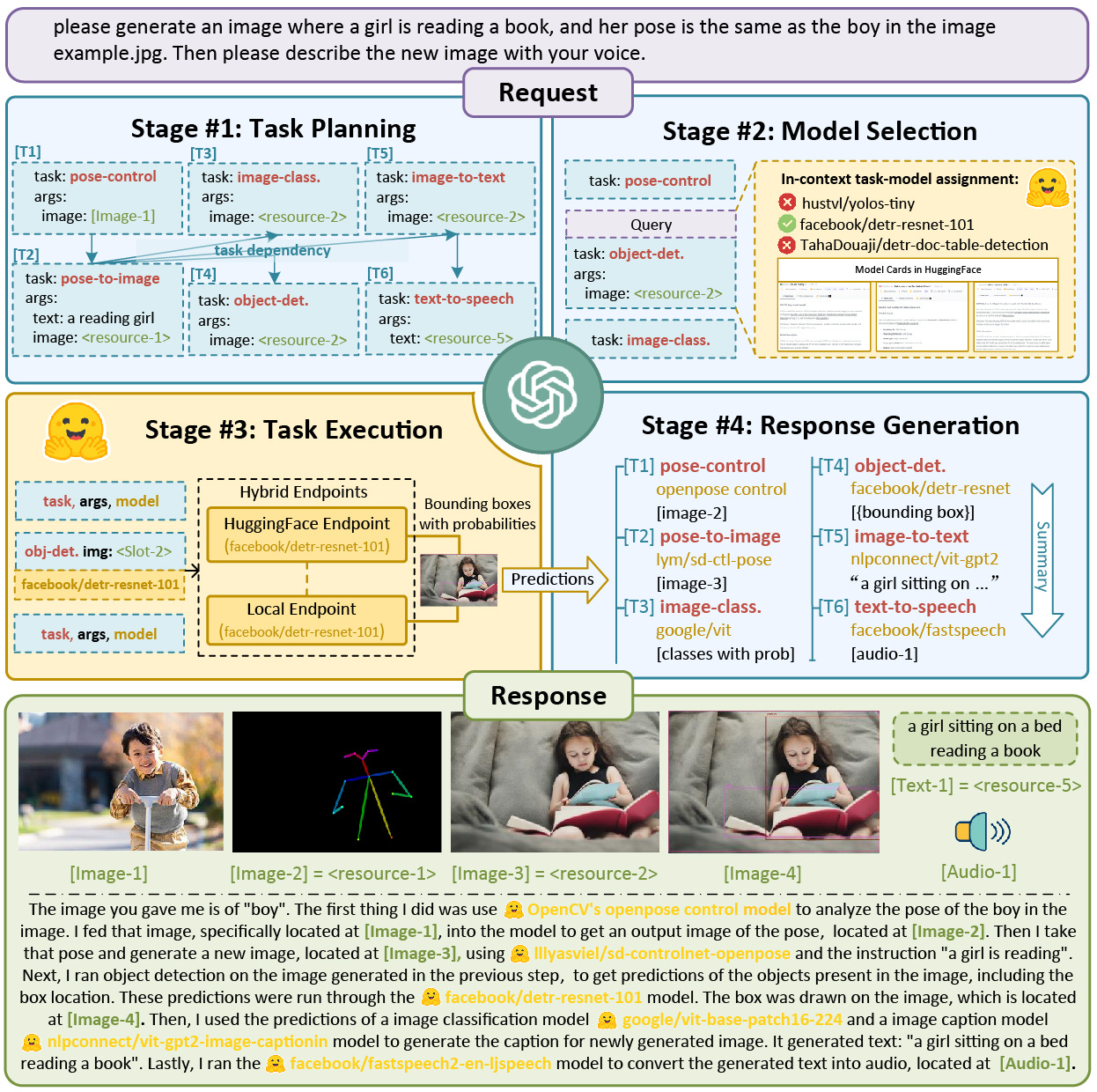

The proposed model has 4 stages:

Task Planning

Model Selection

Task Execution

Response Generation

Task Planning

In this stage, the goal is divided into sub-tasks using LLMs such as ChatGPT.

Each task object contains the following attributes:

Task ID: Unique Identifier

Task Dependencies: A list of task ids that must be completed stated before this task can be executed

Task Type: Type of current task. Here is an image showing the tasks supported by the model

Task Arguments: The data required to execute the task, like params of a function.

Model Selection

The model selection is done by using 3 things:

The model description, given by the authors of AI models in HuggingFace.

The task type.

The original user query.

The models are filtered out by task type and then by the number of downloads of the models. K top models are given to HuggigGPT. It uses these models to solve a single-choice problem based on the above parameters.

Task Execution

Tasks are executed according to dependencies and the results are stored in order.

The architecture also proposed using local models in addition to HuggingFace API to improve speed and efficiency.

Response Generation

HuggingGPT uses ChatGPT to generate a response using the output of the previous 3 stages. The Output shows all the steps taken by the model to get the final result.

Example and Code

Here is an example of the model in action:

You can find the complete code to an application called Jarvis here. Let’s take look at the driver function that integrates all the steps we have seen.

def chat_huggingface(messages, openaikey = None):

start = time.time()

#Get the messages parsed from user query

context = messages[:-1]

input = messages[-1]["content"]

logger.info("*"*80)

logger.info(f"input: {input}")

task_str = parse_task(context, input, openaikey).strip()

logger.info(task_str)

if task_str == "[]": # using LLM response for empty task

record_case(success=False, **{"input": input, "task": [], "reason": "task parsing fail: empty", "op": "chitchat"})

response = chitchat(messages, openaikey)

return {"message": response}

try:

#Get tasks STAGE 1

tasks = json.loads(task_str)

except Exception as e:

logger.debug(e)

response = chitchat(messages, openaikey)

record_case(success=False, **{"input": input, "task": task_str, "reason": "task parsing fail", "op":"chitchat"})

return {"message": response}

tasks = unfold(tasks)

tasks = fix_dep(tasks)

logger.debug(tasks)

results = {}

processes = []

tasks = tasks[:]

with multiprocessing.Manager() as manager:

d = manager.dict()

retry = 0

while True:

num_process = len(processes)

#Start Task Execution

for task in tasks:

dep = task["dep"]

if len(list(set(dep).intersection(d.keys()))) == len(dep) or dep[0] == -1:

tasks.remove(task)

#STAGE 2 and STAGE 3

#run_task function does actual model inference and task execution i.e

process = multiprocessing.Process(target=run_task, args=(input, task, d, openaikey))

process.start()

processes.append(process)

if num_process == len(processes):

time.sleep(0.5)

retry += 1

if retry > 160:

logger.debug("User has waited too long, Loop break.")

break

if len(tasks) == 0:

break

for process in processes:

process.join()

results = d.copy()

logger.debug(results)

#Generate Response STAGE 4

response = response_results(input, results, openaikey).strip()

end = time.time()

during = end - start

answer = {"message": response}

record_case(success=True, **{"input": input, "task": task_str, "results": results, "response": response, "during": during, "op":"response"})

logger.info(f"response: {response}")

return answer

I have mentioned the different stages in the comments above. In this code, the tasks are taken from a predefined demo file. The run_task function checks the dependency array (deps) and selects a model to execute the tasks.

If you want to dive in deeper, the repo link is given above.

Conclusion

Even though programs like GitHub Co-Pilot have made good strides in AI programming, it still requires a lot of human input. To build truly autonomous coding bots we need systems that can not only write the code to the specification but also execute tasks such that they are sufficiently atomic and well coordinated.

Systems like HuggingGPT will help to make coding bots more efficient and help in rapid prototyping. Hence, at least in the short term, make programmers vastly more productive.

📖Resources

PS: An official version might be available soon! 😲

That’s it for this issue. I hope you found this article interesting. Until next time!

Let’s connect :)